An AI music generator is no longer a futuristic concept—it’s a practical, powerful technology reshaping how music is composed, produced, and distributed. From independent creators and YouTubers to game developers and marketing teams, AI-driven music systems are now embedded in modern digital workflows.

At its core, an AI music generator leverages machine learning models, neural networks, and large-scale datasets to create original music tracks in seconds. But it’s not just about speed. It’s about automation, personalization, and unlocking creativity in a digital-first world.

Let’s explore how this technology works, why it exists, and where it’s headed.

What Is an AI Music Generator?

An AI music generator is software powered by artificial intelligence that composes music algorithmically. Instead of manually writing melodies, harmonies, or drum patterns, users input prompts—such as genre, mood, tempo, or instruments—and the system produces a fully structured track.

Unlike traditional music production tools that require musical knowledge, AI music tools:

- Analyze patterns from massive music datasets

- Learn structures like chord progressions and rhythm patterns

- Generate new compositions based on trained models

- Adapt to user-defined parameters in real time

These systems rely heavily on deep learning, especially transformer-based neural networks similar to those used in large language models.

How Does an AI Music Generator Work?

Understanding the mechanism requires looking at three key technical layers:

1. Data Training Layer

AI models are trained on:

- Thousands (sometimes millions) of MIDI files

- Audio samples across genres

- Instrumental recordings

- Datasets annotated with tempo and harmony (chord) labels

The system identifies statistical patterns in:

- Melody structure

- Chord progressions

- Rhythm timing

- Genre-specific arrangements
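
As a rough illustration of what "identifying statistical patterns" can mean, the sketch below counts which pitch tends to follow which in a couple of toy melodies. It is a first-order Markov model, far simpler than the neural networks real platforms use, and the melodies and function names are invented for this example.

```python
from collections import Counter, defaultdict

def learn_transitions(melodies):
    """Count how often each MIDI pitch is followed by each other pitch."""
    counts = defaultdict(Counter)
    for melody in melodies:
        for current, following in zip(melody, melody[1:]):
            counts[current][following] += 1
    return counts

def transition_probabilities(counts):
    """Normalize raw counts into a probability table per pitch."""
    probs = {}
    for pitch, followers in counts.items():
        total = sum(followers.values())
        probs[pitch] = {nxt: n / total for nxt, n in followers.items()}
    return probs

# Toy training data (hypothetical): C-major fragments as MIDI note numbers.
melodies = [
    [60, 62, 64, 62, 60],
    [60, 62, 64, 65, 64],
]
probs = transition_probabilities(learn_transitions(melodies))
print(probs[62])  # how likely each pitch is to follow D (62)
```

In this toy data, D (62) is followed by E (64) twice and by C (60) once, so the learned table favors E. A trained model captures the same kind of regularity over far longer contexts and many more musical features.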

2. Model Architecture

Modern AI music generator platforms use:

- Recurrent Neural Networks (RNNs) for sequence prediction

- Transformers for long-range musical structure

- Generative Adversarial Networks (GANs) for audio synthesis

- Diffusion models for higher-quality waveform generation

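Sequence models such as RNNs and transformers generate music by repeatedly predicting the next note, beat, or audio token from everything that came before. GANs and diffusion models instead produce audio from random noise: a GAN maps it to a signal in one pass, while a diffusion model refines it over many denoising steps.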
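
To make next-note prediction concrete, here is a minimal sketch of the sampling step, assuming a trained model has already assigned a score to each candidate pitch. The scores below are made up, and `sample_next_note` is an illustrative helper rather than any platform's API. The temperature parameter is a common way to trade predictability for variety.

```python
import math
import random

def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; a lower temperature sharpens them."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_note(candidates, scores, temperature=1.0, rng=random):
    """Pick one candidate pitch at random, weighted by its softmax probability."""
    weights = softmax(scores, temperature)
    return rng.choices(candidates, weights=weights, k=1)[0]

# Hypothetical scores a model might assign to three candidate pitches.
candidates = [60, 64, 67]
scores = [2.0, 1.0, 0.1]

rng = random.Random(0)  # seeded so the run is repeatable
phrase = [sample_next_note(candidates, scores, temperature=0.8, rng=rng)
          for _ in range(8)]
print(phrase)
```

A real generator would recompute the scores after every note, since each choice changes the context for the next one. Here they stay fixed to keep the example short.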

3. Output & Rendering Layer

Once a composition is generated:

- MIDI data is converted into digital audio

- Software instruments or synthesizers render sound

- Users can edit structure, layers, and instruments

In advanced systems, you can even generate vocals, lyrics, and multi-track arrangements.
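
The MIDI-to-audio step can be sketched with nothing but the Python standard library. The example below converts MIDI note numbers to frequencies using the standard equal-temperament formula (A4 = note 69 = 440 Hz) and renders them as plain sine tones. The sample rate, the fade-out envelope, and the function names are assumptions chosen for the demo.

```python
import io
import math
import struct
import wave

SAMPLE_RATE = 22050  # samples per second; an arbitrary choice for this demo

def midi_to_hz(note):
    """Convert a MIDI note number to a frequency (A4 = 69 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)

def render_notes(notes, sample_rate=SAMPLE_RATE):
    """Render (midi_note, seconds) pairs as sine tones with a linear fade-out."""
    samples = []
    for note, seconds in notes:
        freq = midi_to_hz(note)
        count = int(seconds * sample_rate)
        for i in range(count):
            fade = 1.0 - i / count  # crude envelope to soften note boundaries
            samples.append(0.5 * fade * math.sin(2 * math.pi * freq * i / sample_rate))
    return samples

def write_wav(target, samples, sample_rate=SAMPLE_RATE):
    """Write float samples in [-1, 1] as 16-bit mono PCM to a path or file object."""
    with wave.open(target, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(sample_rate)
        pcm = (int(s * 32767) for s in samples)
        wav.writeframes(struct.pack(f"<{len(samples)}h", *pcm))

# A short C-major arpeggio; pass a filename such as "arpeggio.wav" to save to disk.
melody = [(60, 0.25), (64, 0.25), (67, 0.25), (72, 0.5)]
audio = render_notes(melody)
buffer = io.BytesIO()
write_wav(buffer, audio)
print(len(audio), "samples,", len(buffer.getvalue()), "bytes")
```

Production systems render with sampled or synthesized instruments rather than bare sine waves, but the pipeline has the same shape: symbolic notes in, a waveform out.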

Core Features of Modern AI Music Generators

Today’s platforms are far more sophisticated than early algorithmic composers. Modern AI systems offer:

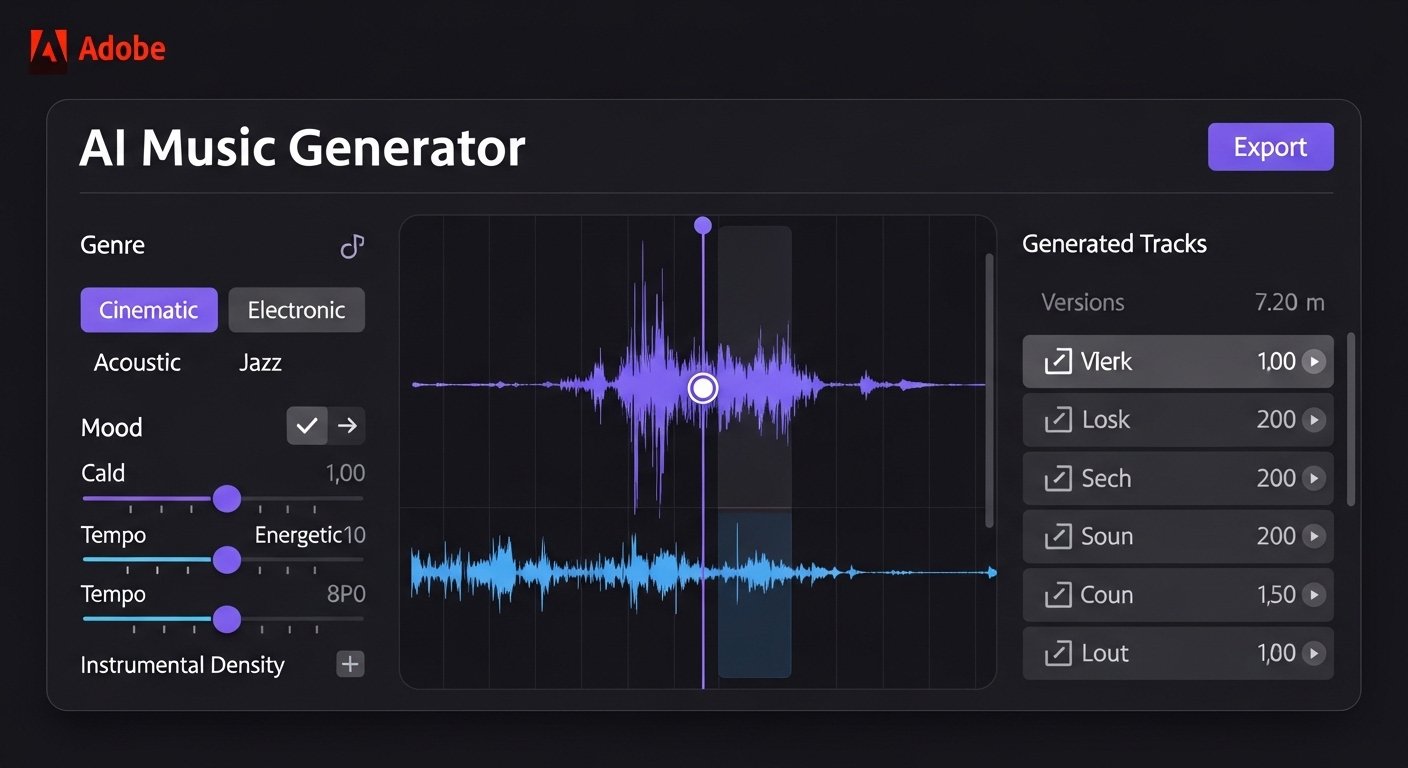

- Genre customization (pop, lo-fi, cinematic, EDM, orchestral)

- Mood control (happy, dark, energetic, calm)

- Tempo and BPM adjustments

- Real-time track editing

- Voice synthesis integration

- API access for developers

- Royalty-free licensing options

Some tools even integrate directly into digital audio workstations (DAWs), enabling hybrid AI-human workflows.

Real-World Applications

The rise of AI music generation is driven by practical demand.

🎬 1. Content Creation

YouTubers, podcasters, and TikTok creators need royalty-free background music quickly. AI systems can generate unique tracks in seconds, which reduces (but does not eliminate) the risk of copyright claims.

🎮 2. Game Development

Dynamic soundtracks adapt in real time to gameplay conditions. AI-generated music can shift intensity based on player actions.
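
One simple way to picture intensity-driven music is layered stems that switch on as a gameplay "intensity" value rises. The sketch below is rule-based rather than generative, and the stem names, thresholds, and smoothing rate are all hypothetical. A generative system could supply the stems or replace the rules entirely.

```python
from dataclasses import dataclass

@dataclass
class Layer:
    name: str
    threshold: float  # intensity at which the layer starts playing

# Hypothetical stems, ordered from always-on to most intense.
LAYERS = [
    Layer("ambient_pad", 0.0),
    Layer("percussion", 0.3),
    Layer("bass", 0.5),
    Layer("lead_brass", 0.8),
]

def smooth(previous, target, rate=0.2):
    """Move part of the way toward the target so the music does not jump abruptly."""
    return previous + rate * (target - previous)

def active_layers(intensity):
    """Return the names of all layers whose threshold has been reached."""
    return [layer.name for layer in LAYERS if intensity >= layer.threshold]

# Simulate a fight starting on the second tick: the target jumps from 0 to 1.
intensity = 0.0
for target in [0.0, 1.0, 1.0, 1.0, 1.0]:
    intensity = smooth(intensity, target, rate=0.5)
    print(f"{intensity:.4f}", active_layers(intensity))
```

Smoothing the intensity before mapping it to layers is a design choice: it keeps the arrangement from flickering when gameplay conditions change rapidly.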

📱 3. Mobile Apps & SaaS Platforms

Meditation apps, productivity tools, and fitness platforms use AI to generate personalized soundscapes.

📢 4. Marketing & Advertising

Brands use AI-generated jingles tailored to specific campaigns, audiences, or demographics.

🎓 5. Education & Learning

Students use AI tools to understand music theory through automated composition examples.

AI Music Generator vs Traditional Music Production

| Feature | AI Music Generator | Traditional Production |
| --- | --- | --- |
| Speed | Seconds to minutes | Hours to days |
| Skill Requirement | Minimal | High |
| Cost | Low subscription | Studio + equipment costs |
| Customization | Prompt-based | Manual composition |
| Human Emotion | Algorithmic | Fully human-driven |

Key difference: AI focuses on automation and scalability, while traditional production emphasizes artistic nuance.

Benefits of AI Music Generator Technology

Here are the most impactful advantages:

1. Accessibility

Anyone—regardless of musical background—can create soundtracks.

2. Cost Efficiency

Startups and small creators avoid expensive licensing fees.

3. Rapid Prototyping

Filmmakers and game developers can generate multiple versions of a cue in minutes.

4. Personalization

AI can adapt music to signals such as a user's stated mood or in-app behavior; adaptation driven by biometric data is still emerging.

5. Scalability

Enterprises can generate thousands of unique audio variations programmatically.
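
Programmatic generation usually starts with building a batch of parameterized requests. The sketch below enumerates every genre, mood, and tempo combination and gives each a distinct seed. `TrackRequest` is a hypothetical shape, not any vendor's schema, and no real API is called; a real integration would send each request to whatever endpoint the chosen platform documents.

```python
from dataclasses import asdict, dataclass
from itertools import product
import json

@dataclass(frozen=True)
class TrackRequest:
    """Hypothetical request shape; real services define their own fields."""
    genre: str
    mood: str
    bpm: int
    seed: int

GENRES = ["pop", "lo-fi", "cinematic", "EDM", "orchestral"]
MOODS = ["happy", "dark", "energetic", "calm"]
TEMPOS = [80, 100, 120]

def build_requests(genres, moods, tempos):
    """Create one request per genre/mood/tempo combination, each with its own seed."""
    combos = product(genres, moods, tempos)
    return [TrackRequest(g, m, bpm, seed) for seed, (g, m, bpm) in enumerate(combos)]

batch = build_requests(GENRES, MOODS, TEMPOS)
print(len(batch), "requests")
print(json.dumps(asdict(batch[0])))
```

Five genres, four moods, and three tempos already give 60 distinct requests. Adding more parameter values or more seeds per combination is how a batch grows into the thousands.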

Limitations and Challenges

Despite the innovation, AI music systems are not perfect.

- Emotional depth limitations – AI mimics patterns but may lack human storytelling nuance.

- Copyright concerns – Training data transparency is still debated.

- Generic outputs – Vague prompts tend to produce generic-sounding tracks.

- Ethical questions – Whether AI should replace work done by human composers is unresolved.

For now, the strongest approach is AI-assisted creation, not full replacement.

Is an AI Music Generator Safe and Reliable?

In terms of cybersecurity and reliability:

- Most reputable platforms use encrypted cloud infrastructure.

- Royalty-free licensing models reduce legal risk.

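- Generated music is typically a statistical recombination of learned patterns rather than a copy of a single song, although models can occasionally reproduce fragments of their training data.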

However, businesses should:

- Review platform licensing agreements

- Understand commercial usage rights

- Avoid uploading proprietary audio without policy review

Why This Technology Exists

The digital economy demands scalable content creation.

Streaming platforms, short-form video apps, and virtual reality environments require massive amounts of audio. Human-only production cannot meet this scale efficiently.

AI music generators solve three major problems:

- Speed

- Cost

- Customization

This aligns with broader automation trends seen in AI writing tools, image generation systems, and code assistants.

Future of AI Music Generation

The next phase of innovation is already underway.

🔮 Emerging Trends

- Real-time adaptive soundtracks using biometric sensors

- Fully AI-generated virtual artists

- AI + blockchain licensing systems

- Emotion-detection music engines

- Integration into AR/VR environments

As hardware improves—especially GPUs and AI accelerators—audio generation quality will continue to rise.

In my view, the future isn’t AI replacing musicians. It’s AI becoming a creative co-pilot, accelerating ideation and reducing technical friction.

Frequently Asked Questions (FAQs)

1. What is an AI music generator?

An AI music generator is software that uses machine learning algorithms to automatically compose original music based on user inputs like genre, tempo, or mood.

2. How does an AI music generator work?

It trains on large music datasets, learns patterns using neural networks, and generates new compositions by predicting musical sequences.

3. Is an AI music generator safe and legal to use?

Most reputable platforms offer royalty-free licenses that cover commercial use, but terms vary by platform and plan. Always verify licensing terms before publishing.

4. Who should use an AI music generator?

Content creators, marketers, game developers, educators, startups, and independent artists can benefit from fast, cost-effective music production.

5. Can AI music replace human composers?

Not entirely. While AI accelerates production and generates structured compositions, human creativity and emotional storytelling remain unique strengths.

6. What are the latest developments in AI music generation?

Recent advances include transformer-based audio models, diffusion-based sound synthesis, and real-time adaptive music engines for interactive environments.

Conclusion: The Sound of the Digital Future

The AI music generator represents a major shift in how sound is created, distributed, and consumed in the digital age. By combining machine learning, automation, and scalable cloud infrastructure, this technology democratizes music production while accelerating creative workflows.

It is not about replacing musicians—it’s about empowering creators with intelligent tools.